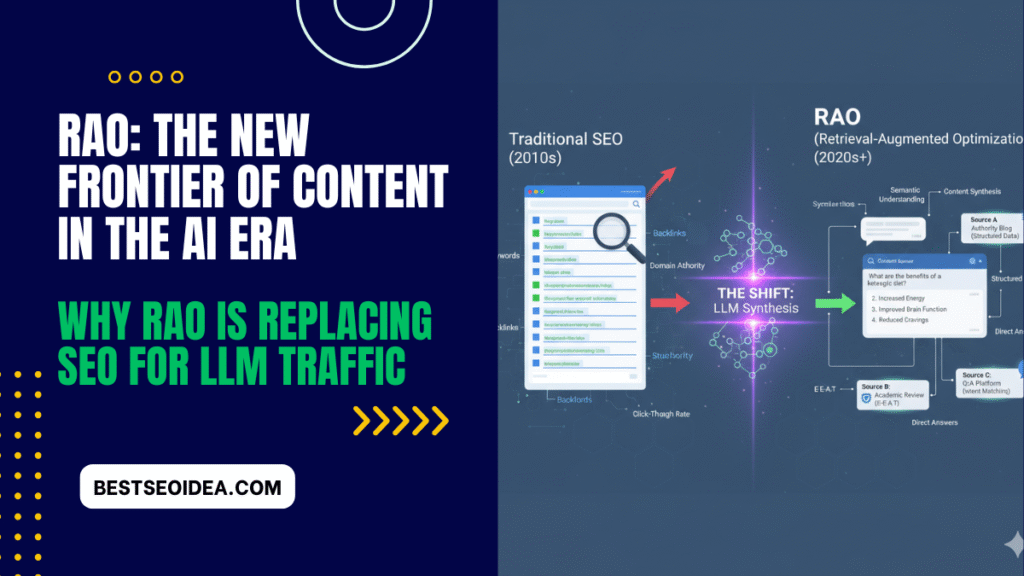

Hi friends, do you know what RAO is, and why we should focus on RAO instead of SEO to get huge traffic from LLMs like ChatGPT, Gemini, and others? The digital world is experiencing a seismic shift in how information is discovered.

For two decades, Search Engine Optimization (SEO) ruled, focusing on getting websites to rank high in a list of “blue links” on Google.

Today, however, a new paradigm is emerging, driven by sophisticated Large Language Models (LLMs) like ChatGPT, Gemini, and Claude. This shift calls for a new optimization strategy: Retrieval-Augmented Optimization (RAO).

What is RAO (Retrieval-Augmented Optimization)?

RAO is an innovative approach to content strategy that ensures your information is not just discoverable by traditional search engines but is retrievable and citable by AI assistants and LLMs.

It is the evolution from optimizing for a list of links (SEO) to optimizing for the direct, synthesized answer that an AI provides.

In short:

- SEO: Optimizing for search engine algorithms to drive human click-through from a Search Engine Results Page (SERP).

- RAO: Optimizing for Retrieval-Augmented Generation (RAG) systems used by LLMs to ensure your content is a trusted, structured, and factual source that is pulled into AI-generated answers.

This distinction is crucial because when a user asks an LLM a question, they receive a single, coherent answer—not a list of ten websites. For your content to get traffic, it must be the source cited in that answer.

Why RAO is Replacing SEO for LLM Traffic

The move from “searching” to “asking” is what drives the necessity of RAO over traditional SEO. Here are the core reasons why a focus on RAO is essential for gaining huge traffic from LLMs:

1. The Death of the “Blue Link”

Traditional SEO’s goal was the coveted #1 SERP spot. LLMs, however, don’t typically provide a ranked list of links; they provide a direct answer. If an AI like Gemini synthesizes an answer for a user, the user is far less likely to scroll down and click a separate website link.

You are either the source the AI cites, or you are invisible. This is often referred to as the “zero-click” phenomenon on steroids—there is no “second page” in an AI’s answer.

2. A Focus on Retrieval Over Ranking

Many LLM-powered assistants use a process called Retrieval-Augmented Generation (RAG). The system first retrieves relevant information from an external knowledge source (such as a search index that includes websites), and then the LLM generates a final response grounded in that retrieved data.

- SEO focuses on factors like backlinks, page speed, and keyword density to rank a page.

- RAO focuses on making content structured and trustworthy enough for the RAG system to retrieve it. This means prioritizing structured data (like Schema), fact-based content, and clear, concise answers over long, keyword-stuffed articles.
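To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is only an illustration: real RAG systems typically use dense embeddings and a vector database rather than the simple word-overlap scoring used here, and the passages, URLs, and function names (`retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, passages, top_k=1):
    """Step 1 (retrieval): return the top_k passages most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(q, Counter(tokenize(p["text"]))), reverse=True)
    return ranked[:top_k]

def build_prompt(query, retrieved):
    """Step 2 (generation input): the prompt an LLM would receive, with sources attached."""
    context = "\n".join(f"[{p['url']}] {p['text']}" for p in retrieved)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"

# Hypothetical passages and URLs, made up for illustration.
PASSAGES = [
    {"url": "https://example.com/rao", "text": "RAO is optimizing content so AI assistants can retrieve and cite it."},
    {"url": "https://example.com/seo", "text": "SEO is optimizing pages to rank in search engine results."},
    {"url": "https://example.com/cake", "text": "A sponge cake needs eggs, sugar, and flour."},
]

top = retrieve("What is RAO?", PASSAGES)
print(build_prompt("What is RAO?", top))
```

Notice that only the retrieved passage reaches the prompt. Content that is never retrieved cannot be cited, which is the whole point of optimizing for retrieval.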

3. Trust and Factual Consistency are the New Authority

LLM-based systems are built to favor accuracy and to avoid “hallucinations” (confident but false statements). Their retrieval layers tend to prefer sources that appear authoritative, trustworthy, and factually consistent. RAO emphasizes:

- Atomic Answers: Creating small, self-contained sections that answer a single, specific question clearly.

- Entity-Based Structure: Organizing content around real-world entities (people, places, things, concepts) and the relationships between them, which LLMs are designed to understand.

- Verifiable Data: Including original research, clear citations, and timestamps, making your content a high-trust source for the AI.
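The "Atomic Answers" idea above maps closely to how retrieval pipelines often chunk a page. As a rough sketch (assuming markdown-style content with H2/H3 headings, and using a hypothetical helper named `chunk_by_heading`), each heading and the text under it become one self-contained chunk:

```python
import re

def chunk_by_heading(markdown_text):
    """Split markdown into one chunk per H2/H3 heading (heading plus its body text)."""
    chunks = []
    heading, body = None, []
    for line in markdown_text.splitlines():
        match = re.match(r"^(#{2,3})\s+(.*)", line)
        if match:
            # A new heading closes the previous chunk, if there was one.
            if heading is not None:
                chunks.append({"heading": heading, "text": " ".join(body).strip()})
            heading, body = match.group(2).strip(), []
        elif heading is not None and line.strip():
            body.append(line.strip())
    if heading is not None:
        chunks.append({"heading": heading, "text": " ".join(body).strip()})
    return chunks

# Hypothetical sample content, made up for illustration.
SAMPLE = """## What is RAO?
RAO is optimizing content so AI assistants can retrieve and cite it.

## How is RAO different from SEO?
SEO targets rankings on a results page.
RAO targets citation inside an AI-generated answer.
"""

chunks = chunk_by_heading(SAMPLE)
for chunk in chunks:
    print(chunk["heading"], "->", chunk["text"])
```

If a chunk only makes sense after reading the section before it, it is not atomic yet. A question-style heading followed by a direct answer tends to survive this kind of splitting well.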

4. Conversational and Contextual Alignment

Users interact with LLMs conversationally, asking nuanced questions. SEO keywords are rigid; RAO is fluid, optimizing for user intent and semantic relevance. Content must be structured to provide dialogue-friendly answers that fit seamlessly into a generative response, which often favors question-and-answer formats and clear subheadings that directly address user queries.

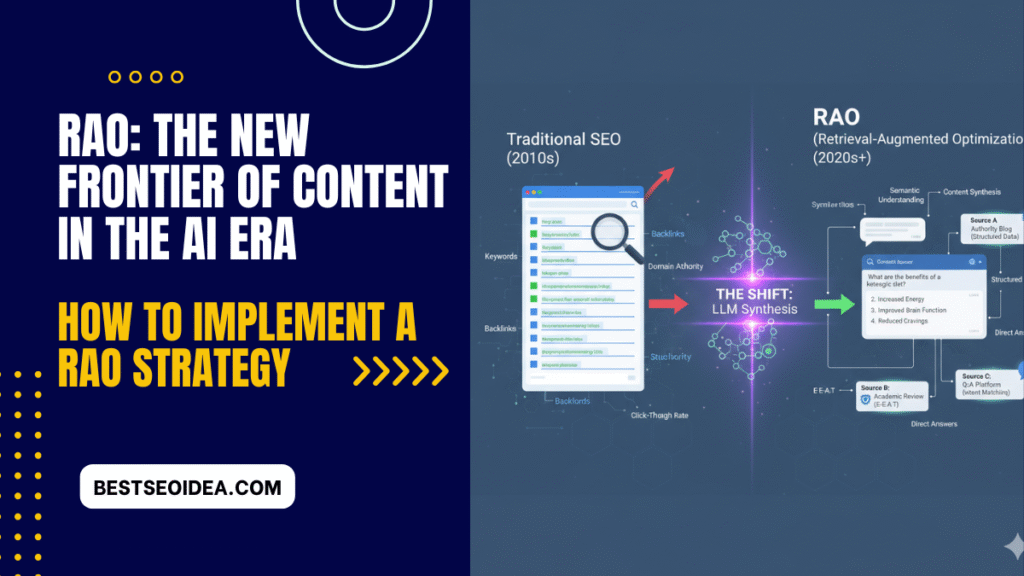

How to Implement a RAO Strategy

Implementing RAO is not about abandoning SEO, but about evolving it. Think of RAO as the future-proofing layer built on a solid SEO foundation.

| Aspect | Traditional SEO Focus | RAO Focus |
| --- | --- | --- |
| Goal | Rank #1 in SERP listings. | Be cited as a trusted source in AI answers. |
| Content Style | Keyword-dense, long-form for ranking. | Structured, fact-based, concise, and atomic answers. |
| Optimization Target | Google’s ranking algorithm. | LLM Retrieval-Augmented Generation (RAG) system. |
| Technical Priority | Site speed, mobile-friendliness, backlinks. | Structured Data (Schema), Content Chunking, Vector Databases (behind the scenes). |

To begin your RAO journey, audit your content to ensure every piece is:

- Structured with Intent: Use H2/H3 headings that directly pose and answer questions.

- Machine-Readable: Implement Schema markup (especially FAQPage, HowTo, and QAPage) to explicitly define facts for retrieval.

- Factual and Trustworthy: Ground content in unique, verifiable data and clearly attribute all authors and sources.
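For the Schema markup item, here is a small sketch of generating FAQPage structured data as JSON-LD with Python. The `FAQPage`, `Question`, and `Answer` types and the `mainEntity` and `acceptedAnswer` properties come from schema.org; the helper name `faq_jsonld` and the sample question are assumptions made up for illustration.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)

# Hypothetical question and answer, made up for illustration.
markup = faq_jsonld([
    ("What is RAO?", "RAO is optimizing content so AI assistants can retrieve and cite it."),
])

# The JSON-LD goes inside a script tag in the page's HTML.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Keep the marked-up questions and answers identical to the text visible on the page, so the structured data describes what a reader actually sees.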

Large Language Models explained briefly

This video explores how Retrieval Augmented Generation (RAG) works, which is the foundational technology behind Retrieval-Augmented Optimization (RAO).

Conclusion

By optimizing for retrieval and citation rather than for page rank alone, businesses can capture the new “first page” of the AI-driven internet and gain massive, targeted traffic from the millions of daily queries posed to LLMs.